Section Review

- Rules of probability, Bayes’ rule

- Distribution: conditional, joint, marginal

- Maximum likelihood estimation

- Note the distinction between probability and statistics

- Probability : we’re given the parameters, and we want to study the distributions

- Statistics : We’re given the data, we want to estimate the parameters (and also, test hypotheses)

- Point Estimates

- when doing MLE, we end up with a single value

- Suppose we have two estimates (e.g. CTR A vs. CTR B)

- How can we be sure A is better than B?
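To make the "single value" concrete, here is a minimal sketch of the MLE for a click-through rate, assuming each impression is an independent Bernoulli trial (an assumption for illustration). The function name `bernoulli_mle` and the counts are hypothetical.

```python
def bernoulli_mle(clicks: int, impressions: int) -> float:
    """MLE of a Bernoulli click probability, which is just the sample mean."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


# Hypothetical counts, made up for illustration only.
ctr_a = bernoulli_mle(clicks=12, impressions=100)
ctr_b = bernoulli_mle(clicks=10, impressions=100)

# Two point estimates: A looks higher, but nothing here says how sure we are.
print(ctr_a, ctr_b)
```

The two numbers alone carry no notion of uncertainty, which is exactly the gap the question above points at.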

Frequentist to Bayesian

The Bayesian asks, “instead of a point estimate, what is the distribution?”

- Frequentist : $\hat{\mu}$

- Bayesian : $P(\mu | data)$

From a distribution, we can find intervals (called credible intervals, not confidence intervals)

We can ask : “What is the probability that A is better than B?”

- Frequentist : point estimate, fixed but unknown parameters

- Bayesian : Those parameters are not fixed, but are also random variables

- That is why we use data to estimate the distribution of those random variables
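One way to answer “What is the probability that A is better than B?” is sketched below. It assumes Bernoulli click data and a uniform Beta(1, 1) prior on each CTR, so that each posterior is a Beta distribution; neither assumption comes from the notes above, and the function name and counts are hypothetical. The probability is approximated by sampling from both posteriors.

```python
import random


def prob_a_beats_b(clicks_a, views_a, clicks_b, views_b,
                   n_samples=20000, seed=0):
    """Monte Carlo estimate of P(mu_A > mu_B | data).

    Assumes a uniform Beta(1, 1) prior, so the posterior for each CTR is
    Beta(1 + clicks, 1 + non-clicks).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_samples):
        mu_a = rng.betavariate(1 + clicks_a, 1 + views_a - clicks_a)
        mu_b = rng.betavariate(1 + clicks_b, 1 + views_b - clicks_b)
        wins += mu_a > mu_b
    return wins / n_samples


# Hypothetical counts, made up for illustration only.
p = prob_a_beats_b(clicks_a=12, views_a=100, clicks_b=10, views_b=100)
print(f"P(A better than B) is roughly {p:.2f}")
```

Unlike the point estimates $\hat{\mu}$, the result is a probability statement about the parameters themselves, which only makes sense once they are treated as random variables.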

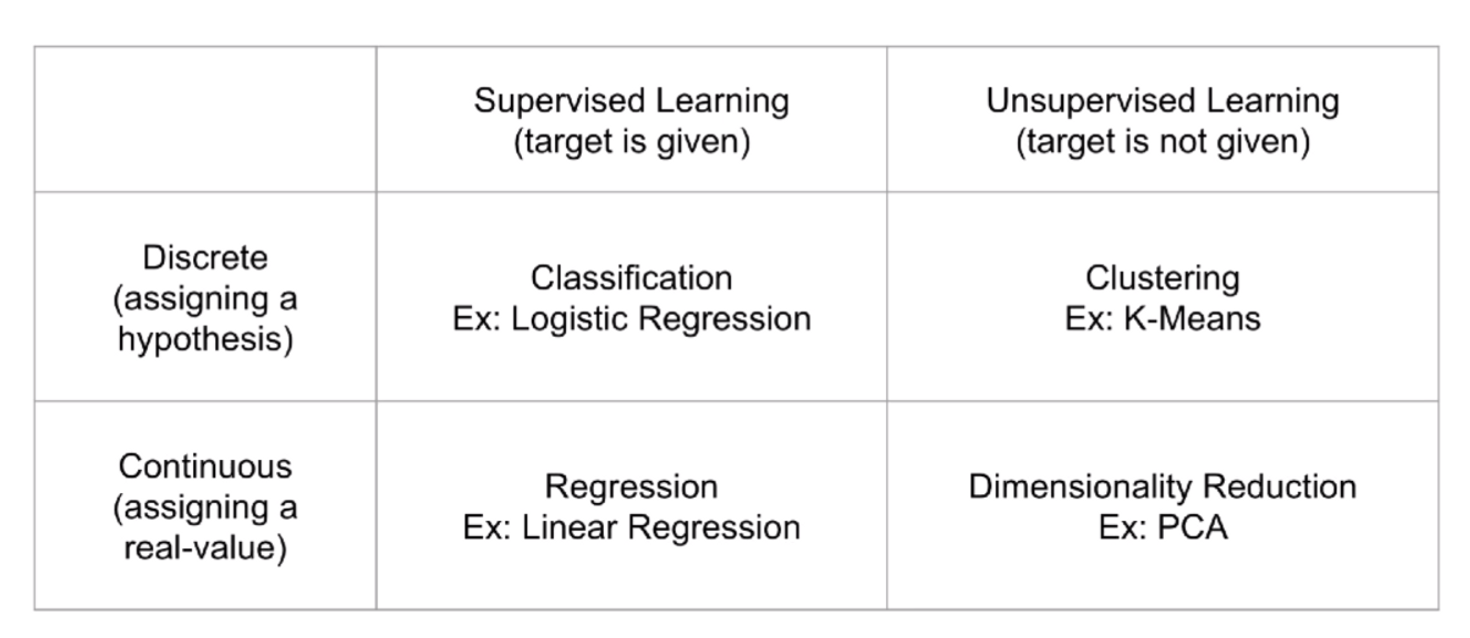

Misconceptions about Statistics vs. ML

Definition of Machine Learning

- ML is when we build a model where the parameters of the model are learned from data

- Linear Regression also uses MLE

- Deep neural networks also use MLE

- The boundary between statistics and ML is not as clear as you might think

- What is the reward signal? Whatever we want to maximize: clicks, donations, purchases, etc. (a.k.a. rewards)

- Recall the application (e.g. online advertising)

!! We want to build a model that maximizes rewards !!
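As a reminder of why linear regression counts as MLE, here is a short derivation under the usual assumption of Gaussian noise with fixed variance (the notation $w$, $x_i$, $y_i$, $\sigma^2$ is introduced here and does not come from the notes). Suppose $y_i = w^\top x_i + \varepsilon_i$ with $\varepsilon_i \sim \mathcal{N}(0, \sigma^2)$ independently. Then the log-likelihood is

$$
\log L(w) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}\left(y_i - w^\top x_i\right)^2
$$

The first term does not depend on $w$, so maximizing the likelihood is the same as minimizing the squared error:

$$
\hat{w}_{\text{MLE}} = \arg\min_{w} \sum_{i=1}^{n}\left(y_i - w^\top x_i\right)^2
$$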

Broad Definitions of Machine Learning

- ML is when we build a model where the parameters of the model are learned from data

- Online learning

- Static methods (e.g. neural networks) train once on a dataset, then never change

- Online learning acts in real-time

- Data is ingested one sample at a time, parameters are updated each time

- The algorithm becomes smarter with each sample it collects

- The label doesn’t matter. Don’t try to draw a hard line between statistics and ML.
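The online-learning idea above (one sample in, one parameter update out) can be sketched with a running mean. This is only an illustration; the class name `OnlineMean` and the reward stream are hypothetical, and a real system would update a richer model.

```python
class OnlineMean:
    """Keeps a running estimate of the mean reward, updated per sample."""

    def __init__(self):
        self.n = 0        # number of samples seen so far
        self.mean = 0.0   # current estimate

    def update(self, x: float) -> float:
        # Incremental form of the sample mean: no past data is stored.
        self.n += 1
        self.mean += (x - self.mean) / self.n
        return self.mean


# Hypothetical stream of rewards (1 = click, 0 = no click).
estimator = OnlineMean()
for reward in [1, 0, 0, 1]:
    estimator.update(reward)

print(estimator.mean)
```

The estimate after each step equals the sample mean of everything seen so far, so the parameter improves as data arrives without retraining on the full dataset.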
